Does AI perceive and make sense of the world the same way humans do?


Artificial intelligence (AI) is becoming increasingly important and is already present in many aspects of our daily lives, but does AI perceive and think about the world the same way we humans do? To answer this question, a team of Max Planck researchers and members of Justus-Liebig-Universität Gießen, Florian Mahner, Lukas Muttenthaler and Martin Hebart, investigated whether AI recognizes objects in the same way humans do, and published their findings in the journal Nature Machine Intelligence. They developed a new approach that makes it possible to clearly identify and compare the key dimensions that humans and AI attend to when they see objects.

“These dimensions represent various properties of objects, ranging from purely visual aspects, like ‘round’ or ‘white’, to more semantic properties, like ‘animal-related’ or ‘fire-related’, with many dimensions containing both visual and semantic elements,” explains Florian Mahner, first author of the study. “Our results revealed an important difference: while humans primarily focus on dimensions related to meaning (what an object is and what we know about it), AI models rely more heavily on dimensions capturing visual properties, such as the object's shape or color. We call this phenomenon ‘visual bias’ in AI. Even when AI appears to recognize objects just as humans do, it often uses fundamentally different strategies. This difference matters because it means that AI systems, despite behaving similarly to humans, might think and make decisions in entirely different ways, which affects how much we can trust them.”

For human behavior, the scientists used around 5 million publicly available odd-one-out judgments involving 1,854 different object images. For example, a participant would be shown images of a guitar, an elephant, and a chair and asked which object does not fit. The scientists then treated multiple deep neural networks (DNNs) that can recognize images as if they were human participants, collecting similarity judgments from them for images of the same objects used with humans. Next, they applied the same algorithm to identify the key characteristics of these images, which the scientists term “dimensions”, that underlie the odd-one-out decisions. Treating the neural networks in the same way as the human participants ensured that the two could be compared directly. “When we first looked at the dimensions we discovered in the deep neural networks, we thought that they actually looked very similar to those found in humans,” explains Martin Hebart, last author of the paper. “But when we started to look closer and compared them to humans, we noticed important differences.”
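The article does not spell out the algorithm used to extract dimensions from odd-one-out judgments. One common way to model such choices, and an assumption here, is to describe each object by a vector of non-negative dimension values, score each pair of objects by the dot product of their vectors, and predict that the object left out of the most similar pair is the odd one out. The minimal sketch below shows only this choice rule for the guitar/elephant/chair example; the dimension names and values are made up, and fitting dimensions to millions of judgments is not shown.

```python
import numpy as np

# Hypothetical toy embedding: rows are objects, columns are made-up
# dimensions ("animal-related", "man-made", "wooden"). Values are illustrative only.
OBJECTS = ["guitar", "elephant", "chair"]
EMBEDDING = np.array([
    [0.0, 1.0, 0.8],  # guitar
    [1.0, 0.0, 0.0],  # elephant
    [0.0, 1.0, 0.9],  # chair
])


def odd_one_out_probs(emb: np.ndarray, a: int, b: int, c: int) -> np.ndarray:
    """Probability that each of objects a, b, c is chosen as the odd one out.

    Assumed choice rule: the pair with the highest dot-product similarity is
    kept together, so the object outside that pair is the odd one out.
    A softmax turns the three pair similarities into probabilities.
    """
    pair_sims = np.array([
        emb[b] @ emb[c],  # a is left out
        emb[a] @ emb[c],  # b is left out
        emb[a] @ emb[b],  # c is left out
    ])
    exp = np.exp(pair_sims - pair_sims.max())  # subtract max for numerical stability
    return exp / exp.sum()


def odd_one_out(emb: np.ndarray, a: int, b: int, c: int) -> int:
    """Return the index of the most likely odd one out."""
    triplet = (a, b, c)
    return triplet[int(np.argmax(odd_one_out_probs(emb, a, b, c)))]


if __name__ == "__main__":
    probs = odd_one_out_probs(EMBEDDING, 0, 1, 2)
    choice = odd_one_out(EMBEDDING, 0, 1, 2)
    print(OBJECTS[choice], probs.round(3))
```

Here the guitar and chair share the made-up "man-made" and "wooden" dimensions, so the elephant is predicted as the odd one out. Under this assumed model, collecting the same kind of triplet judgments from a DNN and from humans lets one set of dimensions be fitted to each in the same way.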

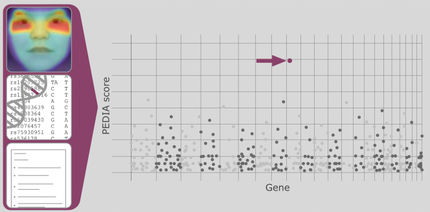

Beyond identifying this visual bias, the scientists used interpretability techniques common in the analysis of neural networks to judge whether the dimensions they found actually made sense. For example, one dimension might contain many animals and could therefore be called “animal-related”. To check whether the dimension really responded to animals, the scientists ran multiple tests: they examined which parts of the images the neural network used, they generated new images that best matched individual dimensions, and they even manipulated the images to remove certain dimensions. “All of these strict tests indicated very interpretable dimensions,” adds Florian Mahner. “But when we directly compared matching dimensions between humans and deep neural networks, we found that the network only roughly approximated these dimensions. For an animal-related dimension, many images of animals were not included, and likewise, many images were included that were not animals at all. This is something we would have missed with standard techniques.”

The scientists hope that future research will use similar approaches that directly compare humans with AI to better understand how AI makes sense of the world. “Our research provides a clear and interpretable method to study these differences, which helps us better understand how AI processes information compared to humans,” says Martin Hebart. “This knowledge can not only help us improve AI technology but also provides valuable insights into human cognition.”
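The direct comparison Mahner describes, in which a DNN's animal-related dimension leaves out some animal images and includes some non-animals, can be illustrated by checking which images load strongly on a human dimension and on its matching DNN dimension. This is a hypothetical sketch, not the study's analysis: the image labels, the scores, the 0.5 cutoff, and the use of correlation and Jaccard overlap are all assumptions made for illustration.

```python
import numpy as np

# Hypothetical scores of six images on one human dimension and its
# best-matching DNN dimension (made-up values; labels only for readability).
IMAGES = ["cat", "dog", "horse", "car", "ball", "tree"]
HUMAN_ANIMAL = np.array([0.9, 0.8, 0.7, 0.0, 0.1, 0.0])
DNN_ANIMAL = np.array([0.9, 0.2, 0.8, 0.6, 0.0, 0.1])


def compare_dimensions(human_dim: np.ndarray, dnn_dim: np.ndarray,
                       threshold: float = 0.5) -> dict:
    """Compare which images load strongly on a human vs. a DNN dimension.

    `threshold` is an arbitrary cutoff chosen for illustration.
    """
    human_set = {int(i) for i in np.flatnonzero(human_dim > threshold)}
    dnn_set = {int(i) for i in np.flatnonzero(dnn_dim > threshold)}
    union = human_set | dnn_set
    return {
        # overall agreement of the two score profiles across all images
        "correlation": float(np.corrcoef(human_dim, dnn_dim)[0, 1]),
        # images in the human dimension that the DNN dimension leaves out
        "missed": sorted(human_set - dnn_set),
        # images the DNN dimension includes that the human one does not
        "extra": sorted(dnn_set - human_set),
        # overlap of the two image sets (1.0 if both are empty)
        "jaccard": len(human_set & dnn_set) / len(union) if union else 1.0,
    }


if __name__ == "__main__":
    result = compare_dimensions(HUMAN_ANIMAL, DNN_ANIMAL)
    print("missed:", [IMAGES[i] for i in result["missed"]])
    print("extra:", [IMAGES[i] for i in result["extra"]])
    print(f"r = {result['correlation']:.2f}, Jaccard = {result['jaccard']:.2f}")
```

In this toy case the two dimensions are positively correlated and share the cat and horse, yet the DNN dimension misses the dog and includes the car. A single similarity score would hide exactly the kind of mismatch that listing missed and extra images makes visible.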